Introduction

CNNs are widely used in computer vision, NLP, and other areas. However, these networks contain a great deal of redundancy. In other words, one could use fewer computing resources to accomplish the same task without losing accuracy. Many publications address this simplification, and one of the main approaches is model quantization.

Quantization means mapping activations or weights onto a small discrete set of values. In general, quantization implies a fixed-point data type. Some publications focus on quantizing the weights, others on quantizing the activations, and some on both. Image classification is the most common application used to verify these algorithms, but other scenarios such as detection, tracking, segmentation, and even super-resolution also attract attention. This post summarizes one of these papers.
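To make the definition concrete, here is a minimal sketch of two ways to map real-valued weights onto a discrete set: sign binarization to {-1, +1}, and symmetric uniform k-bit quantization to integer codes plus one shared scale (a simple fixed-point representation). The function names and sample weights are hypothetical, chosen only for illustration.

```python
def binarize(values):
    """Map each real value to {-1.0, +1.0}; zero goes to +1 by convention."""
    return [1.0 if v >= 0 else -1.0 for v in values]


def quantize_uniform(values, bits):
    """Symmetric uniform quantization to signed integer codes.

    Codes lie in [-(2**(bits-1) - 1), 2**(bits-1) - 1] and values ≈ codes * scale,
    i.e. a fixed-point representation with one shared scale.
    """
    max_abs = max(abs(v) for v in values)
    levels = 2 ** (bits - 1) - 1  # e.g. 7 for 4 bits, 127 for 8 bits
    scale = max_abs / levels if max_abs > 0 else 1.0
    codes = [max(-levels, min(levels, round(v / scale))) for v in values]
    return codes, scale


weights = [0.42, -0.07, 0.0, -0.91, 0.33]
print(binarize(weights))  # [1.0, -1.0, 1.0, -1.0, 1.0]
codes, scale = quantize_uniform(weights, bits=4)
print(codes)  # [3, -1, 0, -7, 3]
```

Binarization is the extreme 1-bit case: each weight keeps only its sign, which is what the binary networks discussed below use.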

Other related papers can be found in model-compression-summary.

How to train a compact binary neural network with high accuracy?

Tricks

-

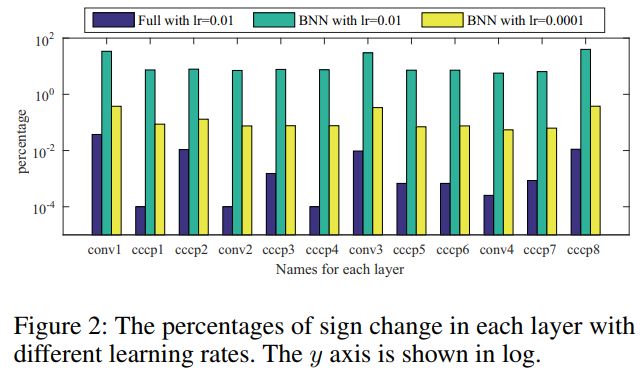

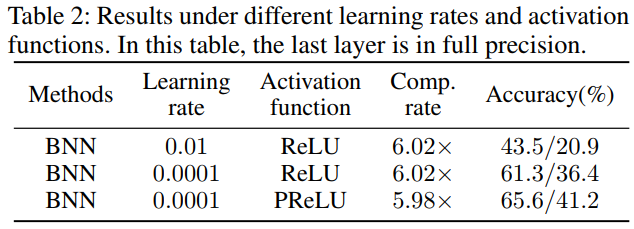

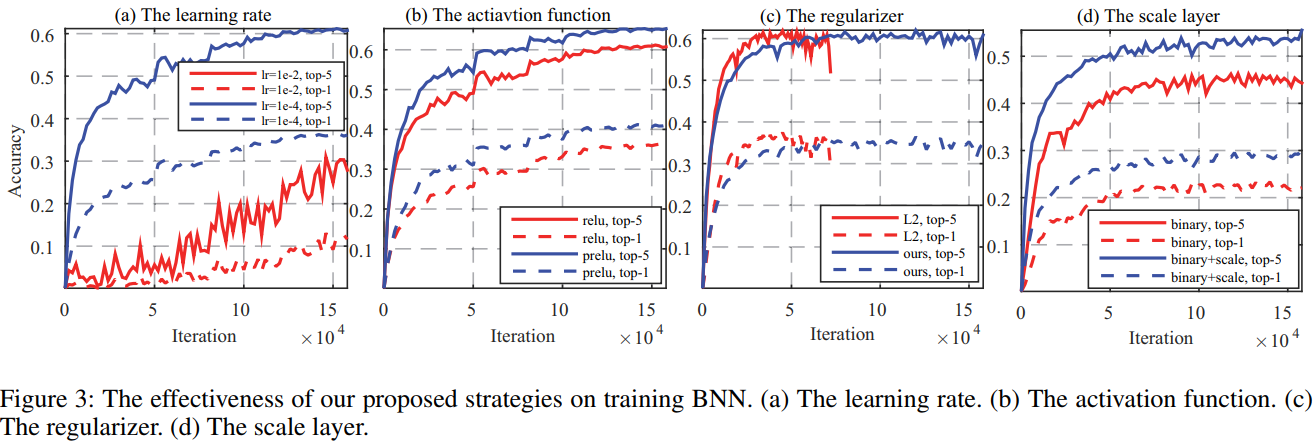

A low learning rate is preferred, starting from 5e-4 or 1e-4. The authors found that too large a learning rate causes too many parameter (sign) flips during training.
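The intuition behind the low learning rate can be illustrated with a toy count. This sketch assumes the usual binary-network setup where the binary weight is the sign of a latent real-valued weight updated by plain SGD; the weights and gradients are random, hypothetical data, not the paper's experiment. A weight flips when the update is large enough to cross zero, so a larger learning rate can only flip more weights.

```python
import random


def count_sign_flips(weights, grads, lr):
    """Count latent weights whose sign changes after one SGD step w <- w - lr * g."""
    flips = 0
    for w, g in zip(weights, grads):
        w_new = w - lr * g
        if (w >= 0) != (w_new >= 0):  # the binary weight sign(w) would flip
            flips += 1
    return flips


rng = random.Random(0)
# Hypothetical latent weights in [-1, 1] and unit-variance gradients.
weights = [rng.uniform(-1.0, 1.0) for _ in range(10_000)]
grads = [rng.gauss(0.0, 1.0) for _ in range(10_000)]

learning_rates = (1e-4, 5e-4, 1e-2, 1e-1)
for lr in learning_rates:
    print(f"lr={lr:g}: {count_sign_flips(weights, grads, lr)} binary weights flipped")
```

Each flip changes the network's actual (binary) function abruptly, which is one way to read the authors' observation that a large learning rate destabilizes training.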

-

Scaling factors for weights and activations are not required, but using PReLU instead of ReLU gives better results.
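For reference, here is a minimal sketch of PReLU next to ReLU. PReLU keeps a learnable slope `alpha` for negative inputs instead of zeroing them, so the negative part of a binarized layer's output is not thrown away. The post does not say where exactly PReLU is placed or how `alpha` is initialized; 0.25 is used here only because it is a common default.

```python
def relu(x):
    """Standard ReLU: negative inputs are zeroed."""
    return max(0.0, x)


def prelu(x, alpha):
    """PReLU: identity for x > 0, learnable slope alpha for x <= 0."""
    return x if x > 0 else alpha * x


def prelu_grads(x, alpha):
    """Return (d out / d x, d out / d alpha), used to learn alpha by backprop."""
    if x > 0:
        return 1.0, 0.0
    return alpha, x


# E.g. pre-activations of a binarized layer, which are sums of ±1 products.
pre_acts = [-3.0, -1.0, 0.0, 2.0]
print([relu(x) for x in pre_acts])         # [0.0, 0.0, 0.0, 2.0]
print([prelu(x, 0.25) for x in pre_acts])  # [-0.75, -0.25, 0.0, 2.0]
```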

-

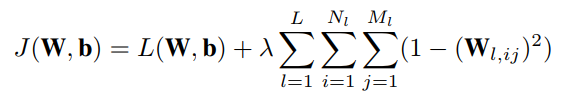

Regularization is important. Rather than the usual L2 norm, use a bipolar regularization that pushes the weights toward ±1.
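The contrast with L2 can be sketched as follows. This assumes a bipolar penalty of the form (1 - w²)², which is zero at w = ±1; that exact form is an assumption for illustration, and the paper's own term (image 4) may differ. Descending on each penalty alone shows where it drives a weight: L2 pulls it toward 0, which is the worst place for a weight that will be binarized, while the bipolar term pulls it toward the nearest of ±1.

```python
def l2_grad(w):
    """Derivative of the L2 penalty w^2."""
    return 2.0 * w


def bipolar_penalty(w):
    """Assumed bipolar term (1 - w^2)^2: zero at w = ±1, positive elsewhere."""
    return (1.0 - w * w) ** 2


def bipolar_grad(w):
    """Derivative of (1 - w^2)^2 with respect to w: -4 w (1 - w^2)."""
    return -4.0 * w * (1.0 - w * w)


def regularization(weights, lam, penalty):
    """Regularization term lam * sum(penalty(w)) added to the task loss."""
    return lam * sum(penalty(w) for w in weights)


def descend(w, grad_fn, lr=0.1, steps=200):
    """Gradient descent on the regularizer alone, to see where a weight drifts."""
    for _ in range(steps):
        w -= lr * grad_fn(w)
    return w


print(descend(0.3, l2_grad))       # shrinks toward 0
print(descend(0.3, bipolar_grad))  # converges toward +1
print(descend(-0.3, bipolar_grad)) # converges toward -1
```

In training, this term would be weighted by a small `lam` and added to the task loss, so it only nudges latent weights toward the binary values rather than dominating the objective.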

-

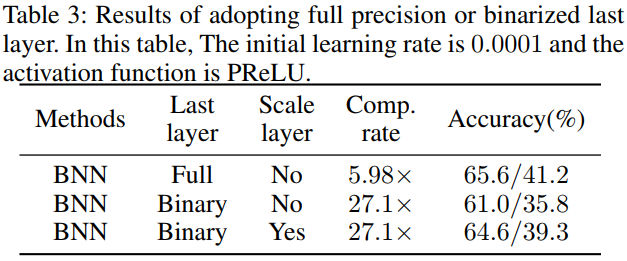

If the last layer is also binarized, add a scale layer before the output layer.
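One plausible motivation for the scale layer, sketched under assumptions: with ±1 inputs and ±1 weights, a binarized last layer produces integer logits in [-n, n], which for realistic n saturate the softmax. A single learnable scalar `gamma` (hypothetical name, fixed here at 0.05 for illustration) rescales the logits before the softmax/loss. The helper functions are illustrative, not the paper's code.

```python
import math


def softmax(logits):
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def binary_linear(x, w_sign):
    """Dot products of ±1 inputs with rows of ±1 weights: integers in [-n, n]."""
    return [sum(xi * wi for xi, wi in zip(x, row)) for row in w_sign]


def scale_layer(logits, gamma):
    """Scalar scale before the output; gamma would be learned by backprop."""
    return [gamma * z for z in logits]


x = [1] * 64
w_sign = [[1] * 64, [1] * 32 + [-1] * 32, [-1] * 64]
logits = binary_linear(x, w_sign)
print(logits)                                  # [64, 0, -64]
print(softmax(logits))                         # saturated: essentially one-hot
print(softmax(scale_layer(logits, 0.05)))      # softer distribution
```

A saturated softmax yields near-zero gradients for most examples, so letting the network learn the output scale is a cheap fix that does not affect the binary weights themselves.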

-

Add multiple binarizations (branches), i.e. multiple binary bases, for better results.
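The idea of multiple binary bases can be sketched with greedy residual binarization: approximate a vector as a sum of scaled sign vectors, each one binarizing what the previous bases missed. This is a swapped-in, commonly used scheme for illustration; the paper's branch structure may differ. With the least-squares scale a = mean(|r|), each extra base reduces the squared error by n·a², so the error never increases.

```python
def sign(v):
    """Binarize one value to ±1 (zero goes to +1)."""
    return 1.0 if v >= 0 else -1.0


def residual_binarize(x, num_bases):
    """Greedy residual binarization: x ≈ sum_i a_i * sign(r_i).

    r_0 = x; a_i = mean(|r_i|) is the least-squares scale for sign(r_i);
    r_{i+1} = r_i - a_i * sign(r_i). Returns (scale, signs) pairs, one per base.
    """
    residual = list(x)
    bases = []
    for _ in range(num_bases):
        signs = [sign(r) for r in residual]
        scale = sum(abs(r) for r in residual) / len(residual)
        bases.append((scale, signs))
        residual = [r - scale * s for r, s in zip(residual, signs)]
    return bases


def reconstruct(bases, n):
    """Sum the scaled binary bases back into a real-valued vector."""
    out = [0.0] * n
    for scale, signs in bases:
        out = [o + scale * s for o, s in zip(out, signs)]
    return out


def sq_error(x, y):
    return sum((a - b) ** 2 for a, b in zip(x, y))


x = [0.9, -0.2, 0.05, -1.3, 0.6, 0.1]
for k in (1, 2, 3):
    approx = reconstruct(residual_binarize(x, k), len(x))
    print(k, "bases, squared error:", round(sq_error(x, approx), 4))
```

Each base still supports cheap binary arithmetic, so the cost grows roughly linearly with the number of bases while accuracy improves.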

The overall benefit can be seen as follows:

Thinking

The second trick seems to conflict somewhat with ‘Learning to train a binary neural network’. On further thought, the two works may improve on each other's ideas and be complementary.