Introduction

CNNs are widely used in computer vision, NLP, and other areas. However, these networks contain a lot of redundancy. In other words, the same task could be finished with fewer computing resources and no loss of accuracy. Many publications have proposed ways to simplify networks, and model quantization is one of them.

Quantization means mapping the activations or weights to a small discrete set of values, which usually implies a fixed-point data type. Some publications focus on quantizing the weights, some on quantizing the activations, and some on both. Image classification is the most common application for verifying these algorithms, but other tasks such as detection, tracking, segmentation, and even super-resolution are also attracting attention. This post summarizes one of these papers.
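To make "a small discrete set of values" concrete, here is a minimal NumPy sketch of generic uniform k-bit quantization over a known range [lo, hi]: each value is stored as an integer code in {0, ..., 2^k - 1} plus a shared step size. The helper names are hypothetical, and taking the range as given is an assumption; different papers choose the range in different ways.

```python
import numpy as np

def quantize_uniform(x, k, lo, hi):
    """Map values in [lo, hi] to k-bit integer codes 0 .. 2**k - 1 (hypothetical helper)."""
    levels = 2 ** k - 1                   # number of steps between lo and hi
    scale = (hi - lo) / levels            # real-valued size of one step
    codes = np.round((np.clip(x, lo, hi) - lo) / scale)
    return codes.astype(np.int32), scale

def dequantize_uniform(codes, scale, lo):
    """Recover approximate real values from the integer codes."""
    return codes * scale + lo

x = np.array([-1.0, -0.3, 0.05, 0.6, 1.0])
codes, scale = quantize_uniform(x, k=2, lo=-1.0, hi=1.0)
print(codes)                               # [0 1 2 2 3]
print(dequantize_uniform(codes, scale, lo=-1.0))
```

With k = 2 only four values survive (-1, -1/3, 1/3, 1), which is the kind of information loss every quantization method has to train around.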

Other related papers can be found in model-compression-summary.

DoReFa-Net

Highlight

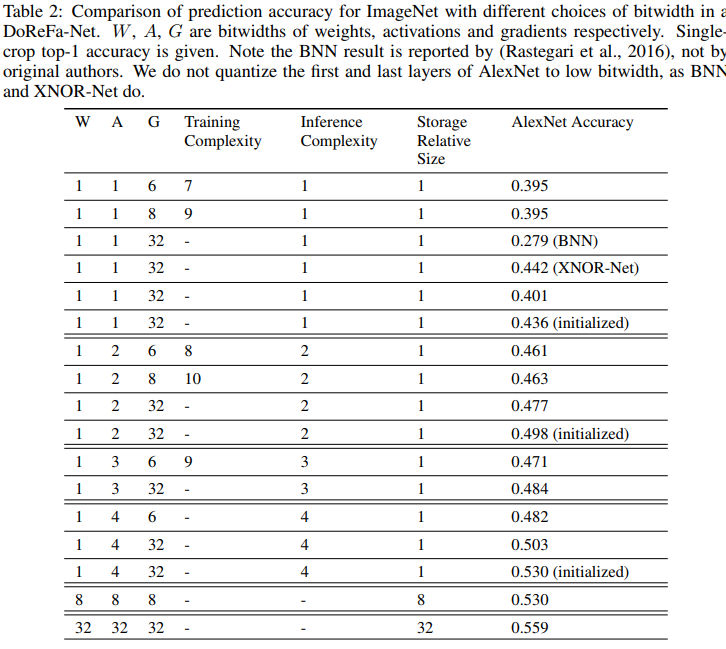

The paper proposes a quantization method that quantizes the activations, weights, and gradients at the same time. It generalizes binarized networks to arbitrary low bitwidths so that networks can be trained efficiently on resource-limited devices. Because the gradients are quantized as well as the activations and weights, the framework saves computation and runtime memory during training, not only at inference.

Quantization of weight

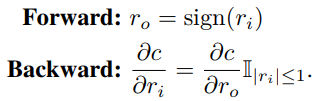

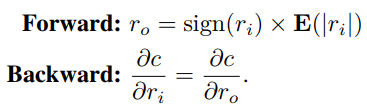

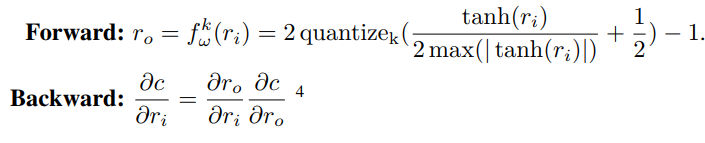

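The paper uses the following forward and backward functions for quantized weights. Unlike XNOR-Net, it scales the binarized weights with a single constant scalar per layer. Both methods use the mean absolute value of the weights, but XNOR-Net computes it separately for each output channel, while DoReFa-Net uses one value shared by all filters of the layer.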
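The difference between the two scaling choices is easy to see in code. Below is a minimal NumPy sketch of the forward pass only (the backward pass simply copies the gradient through). The function names are mine, and storing the output channel on axis 0 of the weight tensor is an assumption about layout.

```python
import numpy as np

def binarize_dorefa(w):
    """1-bit weights scaled by one scalar: mean(|w|) over the whole layer."""
    sign = np.where(w >= 0, 1.0, -1.0)    # sign with sign(0) = +1
    return sign * np.mean(np.abs(w))

def binarize_xnor(w):
    """1-bit weights scaled per output channel (assumed to be axis 0), as in XNOR-Net."""
    sign = np.where(w >= 0, 1.0, -1.0)
    per_channel = np.mean(np.abs(w), axis=tuple(range(1, w.ndim)), keepdims=True)
    return sign * per_channel

# Toy layer with 2 output channels and 2 weights each.
w = np.array([[0.5, -0.5],
              [2.0, -1.0]])
print(binarize_dorefa(w))   # every entry is +/-1.0 (layer mean |w| = 1.0)
print(binarize_xnor(w))     # row 0 is +/-0.5, row 1 is +/-1.5
```

The paper's reason for the single scalar is that channel-wise factors would prevent using bit-convolution kernels for the convolution between gradients and weights in the backward pass.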

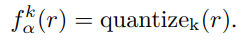

For binary weights:
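As I read the paper, the 1-bit rule is a straight-through estimator (STE): the forward pass takes the sign and multiplies it by the mean absolute value over all weights of the layer, and the backward pass passes the gradient through unchanged. Here r_i is the real-valued weight, r_o the quantized weight, and c the loss.

```latex
\begin{aligned}
\text{Forward:}\quad & r_o = \operatorname{sign}(r_i) \times \mathbb{E}\left(\lvert r_i \rvert\right) \\
\text{Backward:}\quad & \frac{\partial c}{\partial r_i} = \frac{\partial c}{\partial r_o}
\end{aligned}
```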

For k-bit weights with k > 1 (constrained with the tanh function):
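The k-bit rule squashes the weights with tanh, rescales them into [0, 1] using the largest |tanh| value in the layer, rounds them to one of 2^k levels, and maps the result back to [-1, 1], i.e. r_o = 2 quantize_k(tanh(r_i) / (2 max|tanh(r_i)|) + 1/2) - 1 as I understand the paper. A minimal NumPy sketch of the forward pass only; the function names are mine.

```python
import numpy as np

def quantize_k(r, k):
    """Round r in [0, 1] to the nearest of the 2**k levels {0, 1/n, ..., 1}, n = 2**k - 1."""
    n = 2 ** k - 1
    return np.round(r * n) / n

def quantize_weight(w, k):
    """Forward pass of k-bit weight quantization (k > 1): tanh, rescale, quantize, map to [-1, 1]."""
    t = np.tanh(w)
    x = t / (2 * np.max(np.abs(t))) + 0.5    # rescaled into [0, 1]
    return 2 * quantize_k(x, k) - 1          # mapped back to [-1, 1]

w = np.array([-2.0, -0.1, 0.3, 2.0])
print(quantize_weight(w, k=2))   # approx. [-1, -0.333, 0.333, 1]
```

In an autodiff framework, only the rounding inside quantize_k would need a straight-through estimator (a common pattern is `x + stop_gradient(q(x) - x)`); gradients flow through tanh and the rescaling as usual.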

Quantization of activation

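Quantizing activations is easy because their range can be bounded in advance: the output of the previous layer is passed through a bounded activation function that keeps it in [0, 1], and normalization (such as a BN layer) also keeps the dynamic range fairly stable. The activations can then be quantized directly to k bits.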
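A minimal NumPy sketch of the activation path, assuming the bounded activation is a simple clip to [0, 1] (an assumption; any function with that output range would do) followed by the same k-bit rounding used for weights. The function names are mine.

```python
import numpy as np

def quantize_k(r, k):
    """Round r in [0, 1] to the nearest of 2**k evenly spaced levels."""
    n = 2 ** k - 1
    return np.round(r * n) / n

def quantize_activation(a, k):
    """Bound activations to [0, 1] by clipping (assumed bounded activation), then quantize to k bits."""
    return quantize_k(np.clip(a, 0.0, 1.0), k)

a = np.array([-0.7, 0.1, 0.4, 0.9, 3.2])
print(quantize_activation(a, k=2))   # approx. [0, 0, 0.333, 1, 1]
```

No per-tensor scale is needed here, which is why activations are the simplest of the three to quantize.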

Quantization of gradient

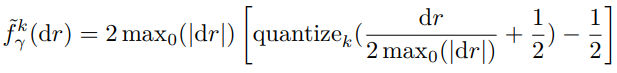

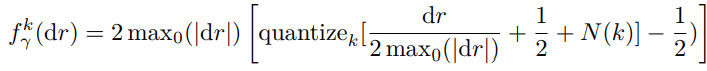

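The range of the gradient cannot be bounded in advance (gradients are unbounded and can span a much larger range than activations), so the activation quantizer cannot be reused. Instead, the gradient quantizer is similar to the weight quantizer but without the tanh step: the gradient is divided by its maximum absolute value, shifted into [0, 1], quantized, and mapped back. This scale (the maximum absolute value) is computed separately for each sample in the mini-batch.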
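For reference, here is the deterministic part of the gradient quantizer as I read the paper. Here dr is the gradient being quantized, and max_0 takes the maximum over all axes except the mini-batch axis, which is what makes the scale per-sample. The noise term is left out.

```latex
\begin{aligned}
\operatorname{quantize}_k(r) &= \frac{1}{2^k - 1}\operatorname{round}\!\left((2^k - 1)\, r\right), \qquad r \in [0, 1] \\
f_\gamma^k(dr) &= 2\max_0(\lvert dr \rvert)\left[\operatorname{quantize}_k\!\left(\frac{dr}{2\max_0(\lvert dr \rvert)} + \frac{1}{2}\right) - \frac{1}{2}\right]
\end{aligned}
```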

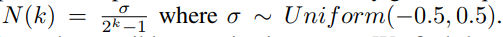

Besides, an important trick is to add uniform noise inside the quantization function (effectively stochastic rounding), which makes training more robust.
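A minimal NumPy sketch of the noisy gradient quantizer. The noise follows the paper's form N(k) = sigma / (2^k - 1) with sigma drawn from Uniform(-0.5, 0.5), as I understand it. With that noise, rounding becomes stochastic rounding, whose expected value equals the unquantized gradient. Treating axis 0 as the batch axis and the small epsilon guard are my assumptions.

```python
import numpy as np

def quantize_k(r, k):
    """Round r to the nearest of 2**k evenly spaced levels on [0, 1]."""
    n = 2 ** k - 1
    return np.round(r * n) / n

def quantize_gradient(dr, k, rng):
    """k-bit gradient quantization with a per-sample scale and uniform noise (axis 0 = batch)."""
    axes = tuple(range(1, dr.ndim))
    m = np.max(np.abs(dr), axis=axes, keepdims=True)     # max over all axes except the batch axis
    m = np.maximum(m, 1e-12)                               # avoid dividing by zero (assumption)
    noise = rng.uniform(-0.5, 0.5, size=dr.shape) / (2 ** k - 1)   # N(k)
    x = dr / (2 * m) + 0.5 + noise                         # shifted into roughly [0, 1]
    return 2 * m * (quantize_k(x, k) - 0.5)

rng = np.random.default_rng(0)
g = rng.normal(size=(2, 5))        # toy gradients: 2 samples, 5 values each
print(quantize_gradient(g, k=4, rng=rng))
```

Averaging many quantized copies of the same gradient converges to the original values. Without the noise, small gradients would be rounded to the same level every time.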

Other tricks

- do not quantize the first and last layers.

- fuse adjacent operations (e.g., the nonlinearity and the quantization step) to reduce runtime memory.

- use a small learning rate (1e-4) and decrease it to 1e-6.

- use the Adam optimizer.

- initialize the low-precision network from a pre-trained full-precision network.
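The learning-rate trick can be sketched as a geometric decay from 1e-4 to 1e-6. The exact decay schedule is not stated here, so the per-epoch geometric form and the epoch count below are assumptions, and the function name is hypothetical.

```python
def geometric_lr_schedule(start=1e-4, end=1e-6, num_epochs=50):
    """Per-epoch learning rates decaying geometrically from `start` to `end` (hypothetical schedule)."""
    if num_epochs < 2:
        return [start]
    ratio = (end / start) ** (1.0 / (num_epochs - 1))   # constant factor between epochs
    return [start * ratio ** epoch for epoch in range(num_epochs)]

lrs = geometric_lr_schedule(num_epochs=5)
print(lrs)   # roughly 1e-4, 3.2e-5, 1e-5, 3.2e-6, 1e-6
```

In a framework such as PyTorch, each value could be written into the `lr` field of an Adam optimizer's `param_groups` at the start of the corresponding epoch.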

Result