Introduction

Convolutional neural networks (CNNs) are widely used in computer vision, NLP, and other areas. However, these networks contain a lot of redundancy. In other words, one could finish the same task with fewer computing resources and no loss of accuracy. Many publications address this kind of simplification, and one approach is model quantization.

Quantization maps activations or weights onto a discrete set of values. In general, quantization implies a fixed-point data type. Among the publications, some focus on quantizing the weights, others on quantizing the activations, and some on both. Image classification is the most common application for validating these algorithms, but other scenarios such as detection, tracking, segmentation, and even super-resolution are also attracting attention. Here I summarize one of the papers.
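To make the idea of mapping values onto a fixed-point grid concrete, here is a minimal sketch of uniform quantization in plain Python. The function names, the 8-bit width, and the `scale` value are assumptions chosen for illustration; they are not taken from any particular paper or library.

```python
def quantize(x, scale, num_bits=8):
    """Map a float to a signed integer code (illustrative uniform scheme)."""
    qmin = -(2 ** (num_bits - 1))      # -128 for 8 bits
    qmax = 2 ** (num_bits - 1) - 1     # 127 for 8 bits
    code = round(x / scale)            # Python rounds exact ties to the even integer
    return max(qmin, min(qmax, code))  # saturate values outside the range


def dequantize(code, scale):
    """Map an integer code back to its float value on the grid."""
    return code * scale


# Hypothetical weights and step size, for illustration only.
weights = [0.31, -0.52, 0.07, 1.9]
scale = 0.01

codes = [quantize(w, scale) for w in weights]
restored = [dequantize(c, scale) for c in codes]

print(codes)     # integer codes that fit in 8 bits
print(restored)  # values on the grid; 1.9 is saturated to the largest code
```

The last weight falls outside the representable range and is clipped, which is one source of quantization error alongside rounding.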

Other related papers can be found in model-compression-summary.

Relaxed Quantization for Discretized Neural Networks

paper link

Comment by reviewer

Highlight

I like this paper very much. It is both practical and theoretically sound. In one sentence, it solves the non-differentiability issue in quantization by employing a probabilistic model. Earlier papers on using stochastic rounding in low-precision training can also be treated as a special case of the proposed approach.
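For readers unfamiliar with stochastic rounding, the following is a minimal sketch of the plain technique on an integer grid. It is not the paper's relaxed quantization method; the function name and the sample count are assumptions for illustration. The point is that rounding up with probability equal to the fractional part makes the rounded value correct on average.

```python
import math
import random


def stochastic_round(x, rng):
    """Round x down or up at random so that the expected result equals x."""
    lower = math.floor(x)
    p_up = x - lower  # probability of rounding up = fractional part
    return lower + (1 if rng.random() < p_up else 0)


rng = random.Random(0)  # fixed seed so the run is repeatable
x = 0.3
samples = [stochastic_round(x, rng) for _ in range(100_000)]
mean = sum(samples) / len(samples)

print(sorted(set(samples)))  # only the two neighbouring grid points appear
print(mean)                  # close to x on average
```

Deterministic rounding would always send 0.3 to 0, whereas the stochastic version keeps the information about the fractional part in the sampling distribution.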

Method

-

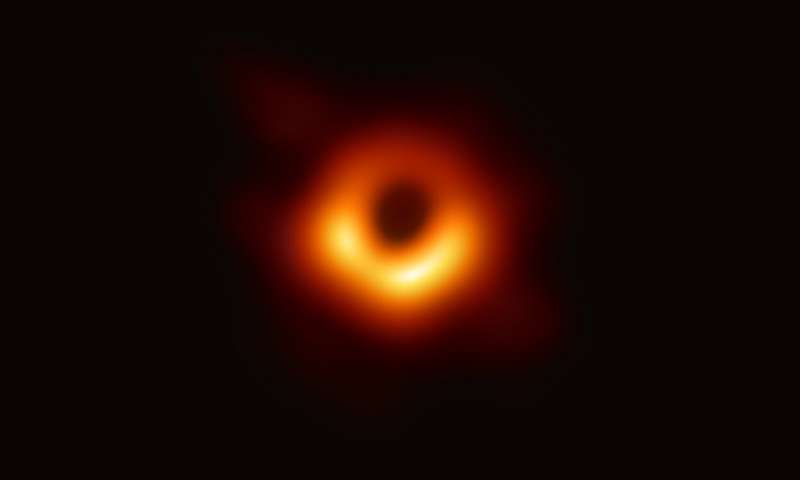

The figure shows a general quantization procedure. The problem is that it loses information and is non-invertible, so it is not easy to optimize the network with gradient descent.

-

Vocabulary choice. The paper gives a good explanation.
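The two points above, that deterministic quantization loses information and is hard to optimize with gradient descent, can be checked numerically. The sketch below is an assumption-laden illustration: the grid values are a hypothetical vocabulary, and the helper names are invented for this example.

```python
def nearest_grid_point(x, grid):
    """Deterministic quantizer: return the grid value closest to x."""
    return min(grid, key=lambda g: abs(x - g))


def finite_difference(f, x, eps=1e-4):
    """Central-difference estimate of the derivative of f at x."""
    return (f(x + eps) - f(x - eps)) / (2 * eps)


grid = [-1.0, -0.5, 0.0, 0.5, 1.0]  # hypothetical vocabulary of allowed values


def q(x):
    return nearest_grid_point(x, grid)


# Many-to-one: distinct inputs collapse to the same output,
# so the input cannot be recovered from the quantized value.
collapsed = [q(0.40), q(0.50), q(0.60)]

# Piecewise constant: away from the decision boundaries the slope is zero,
# so gradient descent receives no signal through the quantizer.
slope = finite_difference(q, 0.40)

print(collapsed)
print(slope)
```

Because the quantizer is a step function, its derivative is zero almost everywhere and undefined at the boundaries between grid cells, which is the non-differentiability issue mentioned in the highlight.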