Introduction

CNNs are widely used in computer vision, NLP and other areas. However, these networks contain a lot of redundancy. In other words, one could often use fewer computing resources to finish the same task without losing accuracy. Many publications address this kind of simplification; one line of work is architecture research, i.e. designing more efficient network structures.

MobileNet v1 is a paper from Google. It designs a new network architecture with far fewer parameters and operations that still achieves accuracy similar to well-known backbone networks such as VGG and ResNet. It has since become a common baseline for network simplification. The main idea is to replace each standard 3×3 conv with a depthwise separable conv, i.e. a 3×3 depthwise conv followed by a 1×1 pointwise conv.

Mobilenet v1

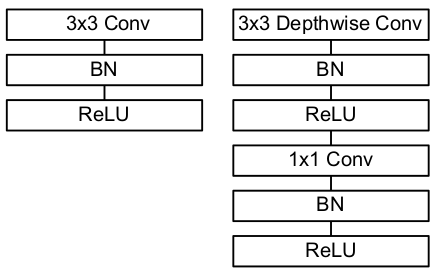

The network architecture change:

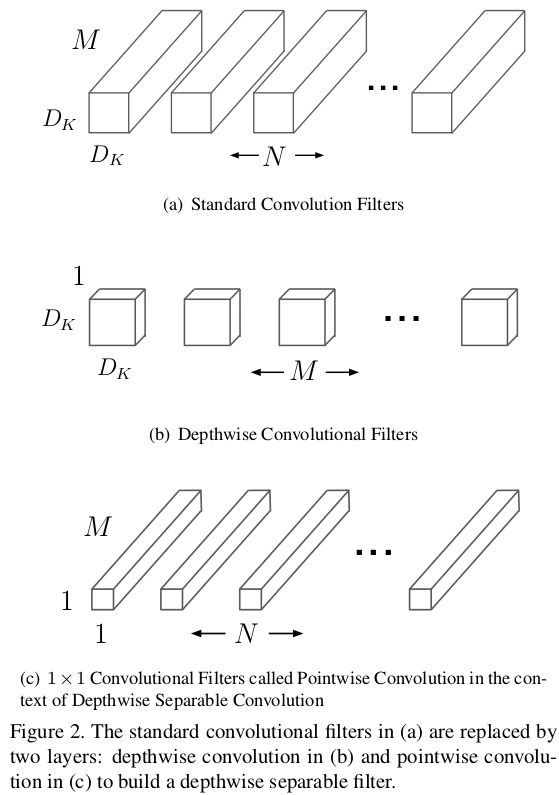

Illustration of the depthwise conv and the pointwise conv, which together form a depthwise separable conv:

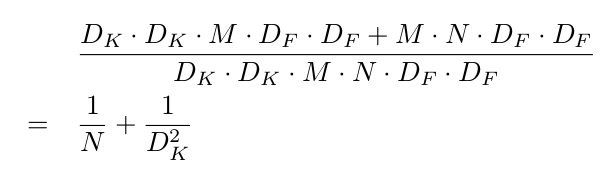

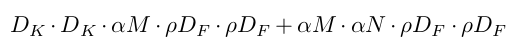
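To make the illustration concrete, here is a minimal pure-Python sketch (no framework assumed; all helper names are hypothetical) of a depthwise conv, a pointwise conv and, for comparison, a standard conv, all with stride 1 and no padding. Like deep-learning frameworks, it computes cross-correlation. The demo at the bottom checks that, when the BN + ReLU that MobileNet places between the two steps is left out, depthwise followed by pointwise equals a standard conv whose kernel is factorized as K[n][m] = W[n][m] · k[m].

```python
from typing import List

# A feature map is stored as [channel][row][col]; a kernel as [row][col].
FeatureMap = List[List[List[float]]]
Kernel = List[List[float]]


def conv2d_single(plane: List[List[float]], kernel: Kernel) -> List[List[float]]:
    """Stride-1, no-padding 2D cross-correlation of one channel with one kernel."""
    k = len(kernel)
    h, w = len(plane), len(plane[0])
    return [
        [sum(plane[i + di][j + dj] * kernel[di][dj] for di in range(k) for dj in range(k))
         for j in range(w - k + 1)]
        for i in range(h - k + 1)
    ]


def depthwise_conv(x: FeatureMap, kernels: List[Kernel]) -> FeatureMap:
    """Depthwise conv: input channel m is filtered only by its own kernel kernels[m]."""
    return [conv2d_single(plane, kernels[m]) for m, plane in enumerate(x)]


def pointwise_conv(x: FeatureMap, weights: List[List[float]]) -> FeatureMap:
    """1x1 conv: output channel n is sum over m of weights[n][m] * x[m]."""
    h, w = len(x[0]), len(x[0][0])
    return [
        [[sum(row[m] * x[m][i][j] for m in range(len(x))) for j in range(w)] for i in range(h)]
        for row in weights
    ]


def standard_conv(x: FeatureMap, kernels: List[List[Kernel]]) -> FeatureMap:
    """Standard conv: output channel n sums conv2d_single(x[m], kernels[n][m]) over all m."""
    out = []
    for per_input in kernels:
        planes = [conv2d_single(plane, per_input[m]) for m, plane in enumerate(x)]
        h, w = len(planes[0]), len(planes[0][0])
        out.append([[sum(p[i][j] for p in planes) for j in range(w)] for i in range(h)])
    return out


if __name__ == "__main__":
    x = [[[1.0, 2.0, 0.0], [0.0, 1.0, 3.0], [2.0, 1.0, 1.0]],
         [[0.0, 1.0, 1.0], [2.0, 0.0, 1.0], [1.0, 1.0, 0.0]]]  # 2 channels, 3x3
    dw = [[[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]]]  # one 2x2 kernel per channel
    pw = [[1.0, 2.0], [0.5, -1.0], [0.0, 1.0]]                 # 2 -> 3 channels
    separable = pointwise_conv(depthwise_conv(x, dw), pw)
    # Build the equivalent standard-conv kernel K[n][m] = pw[n][m] * dw[m].
    factored = [[[[pw[n][m] * v for v in row] for row in dw[m]] for m in range(2)]
                for n in range(3)]
    assert separable == standard_conv(x, factored)
    print(separable)
```

The standard conv stores N·M·k·k weights, while the separable version stores only M·k·k + M·N, which is where the savings come from.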

The complexity reduction achieved by the newly designed structure:

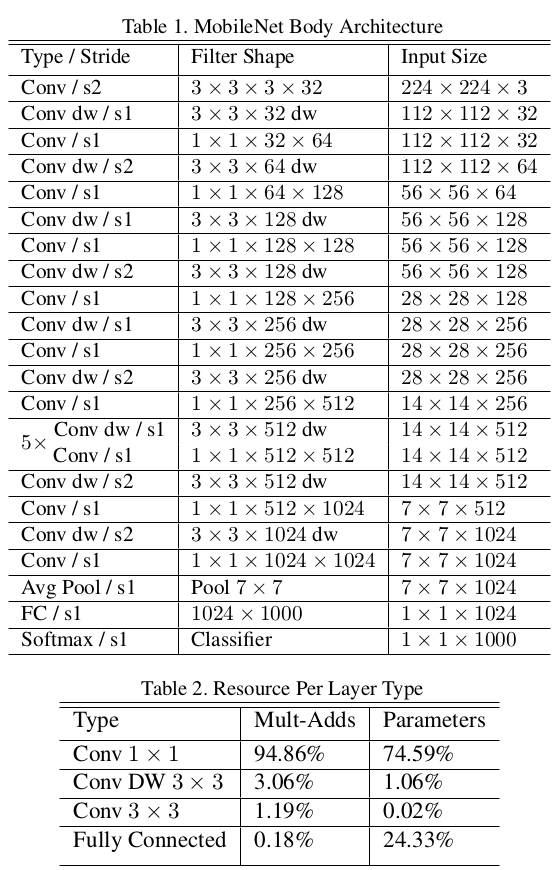
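In the paper's notation (kernel size D_K, M input channels, N output channels, a D_F × D_F output feature map), a standard conv costs D_K·D_K·M·N·D_F·D_F mult-adds, while a depthwise separable conv costs D_K·D_K·M·D_F·D_F + M·N·D_F·D_F, so the ratio is 1/N + 1/D_K². The small sketch below simply evaluates these formulas; the 14×14, 512-channel layer in the usage line is an arbitrary example.

```python
def standard_conv_cost(d_k: int, m: int, n: int, d_f: int) -> int:
    """Mult-adds of a standard conv: D_K * D_K * M * N * D_F * D_F."""
    return d_k * d_k * m * n * d_f * d_f


def separable_conv_cost(d_k: int, m: int, n: int, d_f: int) -> int:
    """Mult-adds of a depthwise conv (D_K*D_K*M*D_F*D_F) plus a 1x1 pointwise conv (M*N*D_F*D_F)."""
    depthwise = d_k * d_k * m * d_f * d_f
    pointwise = m * n * d_f * d_f
    return depthwise + pointwise


if __name__ == "__main__":
    std = standard_conv_cost(3, 512, 512, 14)
    sep = separable_conv_cost(3, 512, 512, 14)
    print(f"{sep / std:.4f}")  # → 0.1131, i.e. 1/512 + 1/9
```

With 3×3 kernels the 1/D_K² term dominates, so the separable conv needs roughly 8 to 9 times fewer operations.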

The overall network structure of mobilenet v1:

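There are two hyperparameters for fine-grained control of the complexity: the width multiplier α (alpha), which thins the number of channels in every layer, and the resolution multiplier ρ (rho), which scales the input resolution. The computational cost scales roughly quadratically with each of them (about α² and ρ²); the parameter count also shrinks roughly by α², but it does not depend on ρ.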

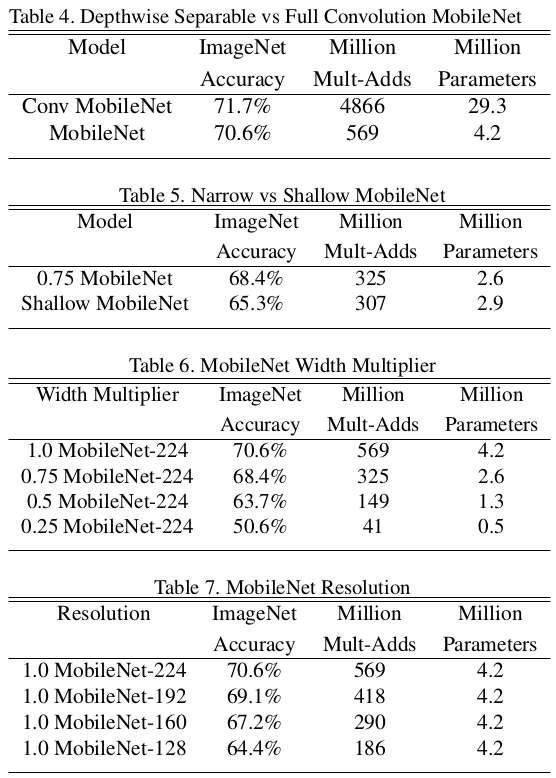
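As a rough sanity check of these scaling rules, the sketch below counts parameters and mult-adds of the whole MobileNet v1 network. Its assumptions: the layer list `DW_BLOCKS` is my transcription of the body of the paper's architecture table, only conv and FC weights (plus FC bias) are counted (BN is ignored), "same" padding is used so each stride-2 layer halves the spatial size, and ρ is expressed through the input `resolution` (ρ = resolution / 224). The function name is hypothetical.

```python
from typing import List, Tuple

# (output channels, stride) of the 13 depthwise separable blocks; the first
# standard 3x3 conv (32 channels, stride 2) and the final pooling + FC layer
# are handled separately below.
DW_BLOCKS: List[Tuple[int, int]] = [
    (64, 1), (128, 2), (128, 1), (256, 2), (256, 1), (512, 2),
    (512, 1), (512, 1), (512, 1), (512, 1), (512, 1),
    (1024, 2), (1024, 1),
]


def mobilenet_v1_cost(alpha: float = 1.0, resolution: int = 224,
                      num_classes: int = 1000) -> Tuple[int, int]:
    """Return (parameters, mult-adds) for conv/FC weights only (BN is ignored)."""
    def width(channels: int) -> int:
        return int(channels * alpha)

    def downsample(size: int, stride: int) -> int:
        # "same" padding: output size is ceil(size / stride)
        return (size + stride - 1) // stride

    params = madds = 0

    # First layer: standard 3x3 conv, 3 -> 32*alpha channels, stride 2.
    in_ch = width(32)
    size = downsample(resolution, 2)
    params += 3 * 3 * 3 * in_ch
    madds += 3 * 3 * 3 * in_ch * size * size

    for out_channels, stride in DW_BLOCKS:
        out_ch = width(out_channels)
        size = downsample(size, stride)  # the stride is applied by the depthwise conv
        params += 3 * 3 * in_ch           # depthwise 3x3
        madds += 3 * 3 * in_ch * size * size
        params += in_ch * out_ch          # pointwise 1x1
        madds += in_ch * out_ch * size * size
        in_ch = out_ch

    # Global average pooling (no weights) followed by the FC classifier.
    params += in_ch * num_classes + num_classes
    madds += in_ch * num_classes
    return params, madds


if __name__ == "__main__":
    for alpha in (1.0, 0.75, 0.5, 0.25):
        p, m = mobilenet_v1_cost(alpha=alpha)
        print(f"alpha={alpha}: {p / 1e6:.1f}M params, {m / 1e6:.0f}M mult-adds")
    for res in (224, 192, 160, 128):
        p, m = mobilenet_v1_cost(resolution=res)
        print(f"resolution={res}: {p / 1e6:.1f}M params, {m / 1e6:.0f}M mult-adds")
```

For α = 1.0 at 224×224 this gives about 4.2M parameters and 569M mult-adds, in line with the paper. Halving α gives about 1.3M parameters and 149M mult-adds, while lowering the resolution to 192 cuts the mult-adds to about 418M and leaves the parameter count unchanged.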

Performance on ImageNet 2012:

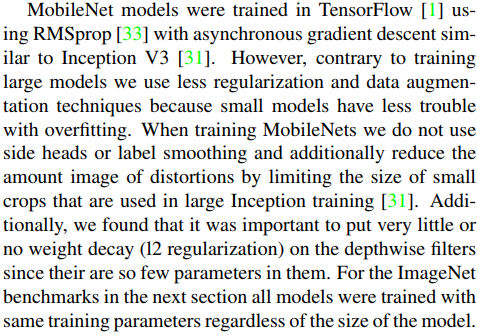

It can be seen that MobileNet v1 reaches 70.6% top-1 accuracy on ImageNet. If the standard convs are kept instead of being replaced with depthwise + pointwise convs, the accuracy is 71.7%, so the factorization costs only about 1.1 points. Reproducing the accuracy reported in the paper seems to require the TensorFlow framework; I have never seen anyone reach the same accuracy with PyTorch. The authors recommend the RMSprop optimizer and very little or no weight decay on the depthwise layers.

Code

One great implementation with the PyTorch framework is here.

Problem with Mobilenet v1

The depthwise conv easily ends up with zero gradients during training, the so-called "degradation" problem. MobileNet v2 gives an explanation and a solution (inverted residuals with linear bottlenecks).

More comparisons can be found in this discussion.

Blogs

The following blogs also give good explanations, experimental analysis and comparisons of MobileNet v1/v2.

1. https://zhuanlan.zhihu.com/p/50045821

2. https://perper.site/2019/03/04/MobileNet-V2-%E8%AF%A6%E8%A7%A3/